Uninstall F-Secure XFENCE for Mac

As a kid, the only OS I was aware of was Windows. Once, my computer was infected to the point where it was almost unusable. A more experienced friend suggested a non-free antivirus and CCleaner.

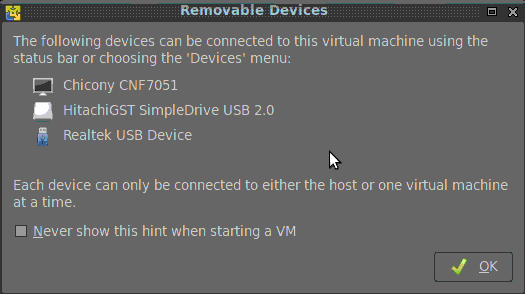

To delete the F-Secure XFENCE kernel extension and rebuild the kext cache, run the following commands: `rm -rf "/Volumes/Macintosh HD/Library/Extensions/XFENCE.kext"` and then `kextcache -u "/Volumes/Macintosh HD"`. Reboot the computer, and then run the F-Secure XFENCE uninstall script to clean up.
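The two commands above can be wrapped in a small script. This is a minimal sketch, not an F-Secure tool: it assumes the startup volume is named "Macintosh HD", that it is run as root (for example from the Recovery Terminal), and that your macOS release still uses `kextcache` (newer releases moved to `kmutil`). The helper names `run` and `remove_xfence` are hypothetical, and the script only prints the commands unless you set `DRY_RUN=0`.

```sh
#!/bin/sh
# Sketch: delete the XFENCE kernel extension from a volume and rebuild that
# volume's kext cache. Prints the commands by default; set DRY_RUN=0 to act.
set -eu

run() {
    # In dry-run mode (the default), print the command instead of running it.
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

remove_xfence() {
    xf_volume="$1"   # e.g. "/Volumes/Macintosh HD" (assumed volume name)
    run rm -rf "$xf_volume/Library/Extensions/XFENCE.kext"
    run kextcache -u "$xf_volume"
}

# Use the first argument as the volume, or fall back to the assumed default.
remove_xfence "${1:-/Volumes/Macintosh HD}"
```

For example, `DRY_RUN=0 sh remove-xfence.sh "/Volumes/Macintosh HD"` (the file name is an assumption) would actually delete the kext. Printing by default is a deliberate choice for anything built around `rm -rf`; reboot afterwards as described above.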

After a lot of effort, I got my machine working again, but it had become so slow that it led me to discover Linux. Now, on a Windows 10 machine, I have nothing but Defender, and since that experience I've never had to use any other antivirus, a 'junk' cleaner, etc. Once bitten, twice shy :)

Edit: I hated investing in anti-stuff.

CCleaner is also essential for cleaning up poorly uninstalled apps that leave registry keys lingering around.

It's also great when you need to fully reset and reinstall a misbehaving app such as Symantec Endpoint Protection, which fails to clean up several critical registry keys that it checks during installation, and then refuses to reinstall if they exist. Sure, you could hunt them down and write a script for Symantec, but CCleaner does it for all apps. I've fixed so many terrible apps and installs using CCleaner that it's an absolute necessity. That and Revo Uninstaller: run Revo Uninstaller, then CCleaner, maybe with a reboot in between, and you've really removed an app.

(The paid version of Revo is even better: it lets you log the files created during an install, and on uninstall it makes sure to remove all the files and directories it previously logged.)

I've used CCleaner for maybe 10 years; it's a great tool. But Windows 10 (and a few older versions, though it's somewhat hidden there) has something similar called Disk Cleanup (press the Win key, type 'Disk Cleanup', click 'Clean up system files', and check all the boxes).

Disk Cleanup removes things that CCleaner doesn't, so I use them together. You can even remove System Restore points and shadow copies (Win key, type 'Disk Cleanup', 'Clean up system files', 'More Options' tab, 'System Restore and Shadow Copies', 'Clean up' button).

Definitely test drive a couple of Linux distributions. I do so periodically and love seeing the progress. There are really only two things keeping me on Windows:

1. I run some Windows-only applications that don't work with Wine.
2. A lot of Linux software doesn't work well on hi-DPI monitors.

Aside from that, since Windows 7 (and later versions of Vista), Windows is fine. IMHO, operating systems aren't terribly interesting these days.

Everything interesting is in the applications on top of the operating system. Windows, macOS, Linux? If the application I need runs on that operating system, then I can be productive.

"...it led me to discover Linux." As someone who is in the same boat but hasn't discovered Linux yet, how hard would you say it would be to install a version of Linux that supports 3 monitors (using an onboard ATI card and a PCIe card)?

The last time I tried installing it, this is the part where I gave up. Also, as someone who has only ever known Windows, I find the graphics in Ubuntu lacking some finesse, i.e. the scrollbars and window panes look like they were created with Java applets (granted, it is a matter of taste and personal choice). What is the most professional-looking desktop to try right now? Sorry, I know this is off-topic, but I too really want to switch from Windows and don't want to waste money on MacBooks, so any advice would be appreciated.

The boring answer is that if you have a graphics card with good Linux support, you're going to have a good experience. Otherwise probably less good.

So without knowing the specifics of your graphics hardware, it's impossible to give a clear answer. People generally don't fault macOS for not supporting whatever hardware they bodged together, yet they expect Linux to work with anything because hackers and magic. I guess that's a good reputation to have, but it's not very useful if you want something that just works. In the case of Linux, what just works is generally the drivers that are built into the system, from vendors that take an active part in Linux development.

And since Intel makes both network and graphics hardware, that's usually your best bet. ATI is generally usable too, but performance and power consumption generally lag behind the equivalent Windows drivers. With that said, it's quite easy to test: download the latest Fedora (or Ubuntu) live DVD and boot it. If that works with your display, a native install is going to work too.

Just don't install Linux as an almost-Windows or a cheap macOS. If you want Windows software, then Windows is what you should run it on. Run Linux because you want a UNIX desktop and the kind of software that goes with it (gcc, bash, rsync, native TCP/IP utilities and so on). I recommend KDE; it feels a bit more well put together as a desktop these days.

With regard to your question about triple monitors:

I mean, I managed to get triple monitors working on FreeBSD without much fuss, so I'm not sure how much help I can be when I say 'it should just work'. I am obviously biased. But it's likely that it will just work; the open source drivers for AMD are significantly better than the open source drivers for nVidia, although I only run nVidia cards and have two setups with triple monitors.

AMD's APUs are actually more powerful than almost all Intel iGPUs. The only one that's competitive is the Iris Pro 580 with the eDRAM add-on, known in a previous incarnation as Crystalwell (not all Iris Pro 580s have this). The tradeoff is that the CPU half of AMD's processors is kinda bad: they are all derived from either the Jaguar laptop processors or Bulldozer, and they desperately need to be refreshed with Ryzen.

So Intel has the better CPU but a mediocre GPU (unless you splash out for Crystalwell), and AMD has mediocre CPUs but a good GPU. AMD's non-APU processors (FX, Ryzen, TR, Epyc) do not have iGPUs, however, and the same goes for Intel's lines of server chips (X99/X299/etc.). iGPUs are only found in 'client' processors.

"How hard would you say it would be to install a version of Linux that supports 3 monitors (using an onboard ATI card and a PCIe card)?"

As a data point, the development desktop I'm using runs 3 monitors on Linux (Fedora 25). They're hooked into a single AMD R9 390 graphics card (overkill, but it was lying around spare when I put the PC together). The monitors are 1x Dell 30" in the middle, with 2x ASUS 24" monitors (one on each side). No HiDPI stuff, and everything works fairly well. I'm using the Xfce spin of Fedora. I didn't need to do any complicated setup. The monitors were all detected fine without mucking around, and I just needed to drag them into position in the Xfce4 GUI tool so it knew which one was on the left, middle and right.

Lacking details, nobody can promise anything, but I have run my current desktop with three monitors on two video cards. I currently run it with two monitors and two cards, with one card bound to a Windows VM.

That is somewhat trickier to set up, but multiple monitors should be fine. My advice echoes others': you can try before you buy (install) with a few flavors of Linux and see what happens. If you have trouble, try to find a fellow human who can help; X is a little surprising to configure if you haven't done it before.

I don't know.

I have been on Xubuntu for about 4 years now and love it. I just got a new touchscreen laptop with Win10 on it and hate the experience. I switched my wife over to Xubuntu because her Win10 laptop was running like crap, and she barely notices any difference in the experience, except that the Linux system is faster.

I think for a lot of people who just want to browse the Web and watch movies, Linux is more than up to the task. And recently, even LibreOffice is working fine for my simple word processing and spreadsheet needs.

There have been a number of cases of installers from trusted developers being infected lately (for example, Transmission was infected twice). On our side (developers), we need to be careful with the idea that 'we will know' when something is wrong, and be more careful when deploying software. It would also be nice if some tool could test a binary to make sure it only contains what it should contain (sort of a whitelist of symbol names compared against the source files, idk). I'm sure something along these lines probably exists for some other purpose.

First of all, this is why reproducible builds (getting bit-for-bit identical binaries regardless of the machine used to build the software) are something we should be putting much more work into. The Debian folks are doing an amazing job there. Another important point is that distributions have already solved effectively all of these problems.

We have automated building and signing systems that mean that installation and upgrades are done in ways that are not vulnerable even to fairly sophisticated attacks. You can build packages locally if you want to verify them, and modifying a package after it has been built invalidates the signature that all modern package managers require before installing a package.
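With reproducible builds, verifying a package you built locally reduces to a bit-for-bit comparison with the published one; it is also the check the "Nth party" performs in the multi-builder scheme described later in this thread. A minimal POSIX shell sketch, assuming the build outputs are already available as local files; `builds_identical` is a hypothetical helper name, and checking the package signature (for example with `gpg --verify`) is a separate step not shown here.

```sh
#!/bin/sh
# Sketch: succeed only if every artifact passed in is bit-for-bit identical
# to the first one. This is only meaningful when the build is reproducible.
set -eu

builds_identical() {
    # Comparing requires at least two artifacts; otherwise return 2.
    [ "$#" -ge 2 ] || return 2
    reference="$1"
    shift
    for candidate in "$@"; do
        # cmp -s prints nothing and exits non-zero if the files differ
        # or cannot be read.
        cmp -s "$reference" "$candidate" || return 1
    done
    return 0
}
```

For example, `builds_identical local/foo.deb mirror/foo.deb` (hypothetical paths) returning 0 means your rebuild matches the published package. A non-zero status means the files differ, which points either to tampering or to a build that is not yet reproducible.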

As part of the openSUSE project, we even have a free-to-use (and free as in freedom) build system called the Open Build Service (OBS), which lets you build packages (with automated rebuilds when dependencies update) for many different distributions (Arch Linux, Debian, Ubuntu, Fedora, RHEL and, obviously, openSUSE and SLES). I get that distributions aren't 'sexy', but it's getting quite frustrating seeing all these communities make the same mistakes that distributions made (and learned from) more than 20 years ago.

Disclaimer: I work for SUSE and am an openSUSE community member.

As I posted above, we have a build service that does this automatically for you. It takes maybe 30 minutes to create a few spec files, and then your software is supported on a bunch of different distributions. You can then point your users at the repo (or mirror the repo if you prefer). OBS also lets you create forks of projects that just inherit the unmodified packages (and those forks can be cross-host, so you don't need to self-host a whole distribution). I agree that doing everything I mentioned manually is hard; that's again why I said that distributions have solved this problem and made it easy.

But who would use the build service, the consumer of the software or the publisher?

The publisher. A user could (if they were really paranoid) rebuild the packages locally with two or three commands.

Also, that protects you against malware injecting binaries into an executable while it is compiled, but not against malware injecting code into the executable's source code.

You can download the source code that OBS used (both as a source RPM generated by the builder and as the OBS repo that the builder was given read-only access to), and OBS supports cryptographic signatures of the originating source (with gpg-offline keys to avoid WoT attacks). If your developers use sane source control practices (GPG-signing every commit, but especially tags), then you are protected against that too. Of course, reproducible builds would solve this problem even better (protecting against attacks on OBS that cause it to add source that is not in the repo). As a side point, our threat model doesn't fully trust the nodes compiling the software, so such attacks are fairly limited in scope (but I'm not an OBS developer, so I'm really not the right person to ask these questions).

Debian packages are signed.

It is hard to make software distribution completely secure, but one could imagine creating pretty strong guarantees. Imagine that you have N completely different parties/machines (isolated, on different infrastructure, with different user accounts):

- N-1 machines build a new Debian package.
- The N-1 results are fetched by the Nth party.
- The Nth party checks whether the build results are identical.
- The Nth party signs the result with GPG on a machine that is not connected to a network.
- The signed package is distributed.

An attacker would need to compromise either (1) all N-1 build machines, (2) the offline machine used for signing packages, or (3) the upstream source. I consider (3) to be the most serious threat. But in this scenario, only the distribution packagers need to inspect the source changes. In the common scenario where you download from a vendor (e.g. the Transmission or CCleaner website), every user has to inspect a binary blob.

Another possibility would be to have strong sandboxing for applications. An application could still participate in DDoS attacks, etc.

But it would at least not encrypt or destroy your data.

tl;dr: reproducible builds are an extremely important development, and Debian (and the other distributions participating in this initiative) should be commended for their work!

I'm not sure exactly how these infections work, but one method would be to infect the developers' PCs, in which case you essentially can't trust anything.

You'd need some kind of Byzantine fault tolerance (mandatory multi-person code review?) to be sure nothing like this ever happens. What makes this scary is that, as far as I know, pretty much no software has that kind of security, and there are several pieces of widely used software that always update automatically (sometimes for good reason, sometimes not so much).

Why doesn't MS Windows do the maintenance? CCleaner does things like clean up ancient cache files, remove Windows update files, and remove registry entries for software that's no longer installed. That sort of maintenance seems like it's the result of poor design in an OS that has the hood welded shut. I actually used CCleaner on Win 10 recently: an MS update had associated loads of files with TWINUI, which wasn't installed, making things like viewing images impossible. CCleaner found on the order of thousands of stale entries, removed them and made a backup. It also let me simply check and disable startup programs. I don't think Win 10 has a way to do that in the user UI?

"Why doesn't MS Windows do the maintenance - CCleaner does things like clean up ancient cache files, remove Windows update files,"

Windows 10 can already do this by default.

"remove registry entries for software that's no longer installed."

Uninstalling old-style Windows software is a hard problem that generally can't be done in a foolproof way without potentially breaking stuff, due to bad legacy design decisions like giving programs free rein to install stuff wherever they want, without a proper application model or dependency tracking. Therefore, registry cleaners are also prone to breaking things.

"That sort of maintenance seems like it's the result of poor design in an OS that has the hood welded shut."

That's what UWP solves.

"It also let me simply check and disable startup programs"

This is already in the Windows 10 UI.

"Given the presence of this compilation artifact as well as the fact that the binary was digitally signed using a valid certificate issued to the software developer, it is likely that an external attacker compromised a portion of their development or build environment and leveraged that access to insert malware into the CCleaner build that was released and hosted by the organization."

Did you read the article? It can happen to any company. It just so happens they targeted a very popular downloaded application.

Who knows what other software installers have been compromised.

I wish there was more technical information; even the advisory is unclear here. The CCleaner installer is always 32-bit for compatibility, and it installs both 32-bit and 64-bit program binaries. On 64-bit systems, the default shortcuts point to the 64-bit binary. So was the 32-bit installer compromised, or only the 32-bit binary? The original advisory makes references to the installer, which is quite confusing. I tried to figure it out myself, but I assume the loader has VM detection techniques, as I wasn't able to infect a VM.

Wow, that's crazy. I was on the toilet just this morning running CCleaner on my phone, as I do weekly, and the thought momentarily crossed my mind that I shouldn't trust CCleaner on my phone (I limit my use of apps as much as I can). But my next immediate thought was, 'Nah, this is Piriform we're talking about, they're one of the few free software developers I can probably trust to never inject malware into their products.' I mean, I guess that's still true, since the build was compromised by an outside party, but it's still an interesting moment of synchronicity.

Edit: Updating with their release blog post instead, as it's clearer:

Affected Versions: This compromise only affected customers with the 32-bit version of v5.33.6162 of CCleaner and v1.07.3191 of CCleaner Cloud. No other Piriform or CCleaner products were affected. We encourage all users of the 32-bit version of CCleaner v5.33.6162 to download v5.34. We apologize and are taking extra measures to ensure this does not happen again.

macOS seems fine; it looks like it was their 32-bit Windows/Cloud offerings:

Before delving into the technical details, let me say that the threat has now been resolved in the sense that the rogue server is down, other potential servers are out of the control of the attacker, and we're moving all existing CCleaner v5.33.6162 users to the latest version.

Users of CCleaner Cloud version 1.07.3191 have received an automatic update. In other words, to the best of our knowledge, we were able to disarm the threat before it was able to do any harm.

So if you have a Windows copy, look for a patch, I guess. It seems it's not just fixed, but the rogue server has also been taken down.

F-Secure is an advanced detection and protection technology that concentrates on protecting PCs from modern cyber threats, including viruses, spyware, worms, malicious email attachments, malware, and so on. To uninstall these files, you have to purchase the licensed version of Reimage uninstall software.

If you cannot uninstall F-Secure Anti-Virus because its uninstaller does not appear in Programs and Features, or because the uninstaller fails to remove the whole program package, we would recommend using a professional software removal utility. For this purpose, you can use any well-rated third-party removal tool currently available on the market. To minimize the search time, we would recommend using. Download the program that you prefer and run the setup file. Once it is installed, set the program to uninstall F-Secure Anti-Virus, and reboot the PC once done.

Use F-Secure Uninstaller

F-Secure has released an official uninstaller tool called F-Secure Uninstallation Tool 3.0.

It is capable of removing and cleaning up F-Secure Service Platform, F-Secure Protection Services for Consumers and Business, F-Secure Anti-Virus, F-Secure Internet Security and F-Secure Technology Preview from workstations. Download the tool by clicking on. Then extract the ZIP archive and run the UninstallationTool.exe file. Once the removal is done, reboot your PC.

Uninstall F-Secure manually

If the F-Secure uninstaller is available in Control Panel or Settings, try uninstalling it the usual way:

Open Task Manager and check whether the F-Secure process is running. If you find it, right-click on it and select End task. Then locate the program's icon on the Windows taskbar, right-click on it, and select Exit. Once done, press Windows key + I and open the Apps section (Windows 10).

Look for the F-Secure entry, click on it, and select Uninstall. Alternatively, you can open Control Panel and go to Programs and Features. F-Secure is normally included in the list.

Click on it and select Uninstall. To remove the leftovers, press Windows key + R, type regedit, and press Enter. Then click Edit at the top of the window and select Find.

Enter F-Secure in the search box and click Find Next. Remove all entries detected. Finally, reboot your PC.

Alice Woods - shares knowledge about computer protection

Alice Woods is a security expert who specializes in cyber threat investigation and analysis.

Her mission on Ugetfix is to share knowledge and help users protect their computers from malicious programs.